Chatbots powered by artificial intelligence (AI) have transformed how the hospitality industry delivers customer service and support. AI chatbots can handle everything from answering frequently asked questions to making reservations and resolving guest issues, 24/7. However, as these conversational AI assistants grow more advanced, there is an increasing need for explainable AI capabilities.

What is Explainable AI?

Explainable AI (XAI) refers to AI models and techniques that provide transparency into their underlying logic, rationale, and decision-making process. Rather than being opaque "black boxes", explainable AI chatbots can generate human-understandable explanations for their outputs or recommendations. XAI techniques include feature visualization and counterfactual explanations, as well as metrics for accuracy, relevance, and truthfulness.

Why Explainable Chatbots are Crucial for Hospitality

In the hospitality space, where brands live and die by customer experience and trust, transparent and interpretable AI chatbots are essential:

- Build Confidence: When AI chatbots influence guests' reservations, pricing, recommendations, and more, the brands deploying them need to understand the chatbot's reasoning and decision-making process. Explainable chatbots provide that transparency, which can build trust and confidence in both the technology and the brand itself.

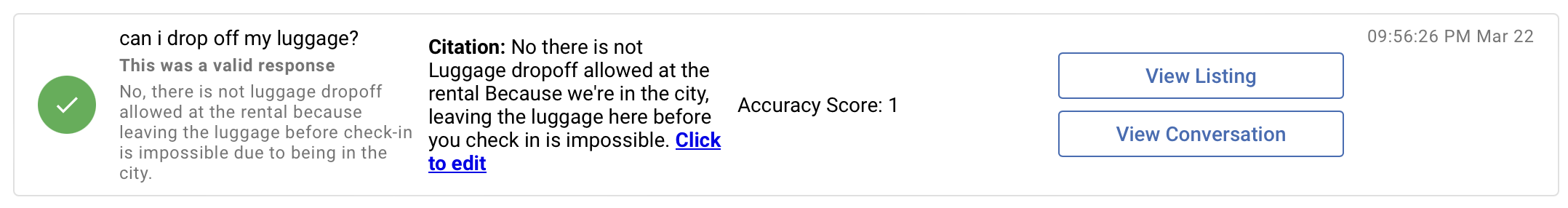

- Understanding Where Responses Come From: Two of the biggest questions users have when implementing chat AI are "where are these responses coming from?" and "what content is being used to generate them?" Explainable AI allows each AI-generated response to be accompanied by the relevant citations and documents that were used to generate it. At Yada, we provide our users with a running log of every AI event on the platform, allowing them to understand and monitor their AI's behavior in real time.

- Transparency and Trust: Clearly citing the sources used to generate an AI response provides transparency into the information and data that informed the output. This helps build trust with the end user by demonstrating that the AI is not making up information but drawing from factual, verifiable sources, and it gives users an easy way to modify and control those sources.

- Accountability and Oversight: Having full citations allows for accountability and oversight of the AI system's knowledge base and background data. If any inaccurate, biased or problematic sources are identified in the citations, they can be reviewed and modified by the user.

- Providing Avenues for Further Content Expansion: Clear citations allow users of the AI system to easily identify and follow up on the original sources for additional context, fact-checking, or deeper explanations and content if needed. This enriches the knowledge trail instead of treating the AI output as a final destination.

- Accuracy Scores: These serve as a key evaluation metric for gauging the effectiveness and quality of AI systems. They measure how close the AI's output is to the actual or desired output.
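
The citation, event-log, and score ideas above can be made concrete with a small data model. This is a minimal Python sketch under stated assumptions: the class names (`Citation`, `AIResponse`, `EventLog`), their fields, and the sample values are hypothetical illustrations, not Yada's actual schema. It only shows how a response can carry its sources and score, and how each event can be appended to a reviewable log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Citation:
    """A source document that contributed to an AI response (hypothetical schema)."""
    doc_id: str
    title: str
    excerpt: str


@dataclass
class AIResponse:
    """An AI-generated reply bundled with the sources and score that explain it."""
    text: str
    citations: list[Citation]
    accuracy_score: float  # percentage; how it is computed is out of scope here


@dataclass
class EventLog:
    """Append-only log of AI events that a user can review and monitor."""
    events: list[dict] = field(default_factory=list)

    def record(self, guest_message: str, response: AIResponse) -> None:
        # Store the question, the answer, and which sources backed it.
        self.events.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "guest_message": guest_message,
            "response": response.text,
            "sources": [c.doc_id for c in response.citations],
            "accuracy_score": response.accuracy_score,
        })


log = EventLog()
reply = AIResponse(
    text="Check-in starts at 3 PM.",
    citations=[Citation("policy-007", "House Policies", "Check-in begins at 3:00 PM.")],
    accuracy_score=92.5,  # illustrative value only
)
log.record("What time is check-in?", reply)
print(log.events[0]["sources"])  # ['policy-007']
```

Keeping document IDs in every log entry is what lets a user trace a questionable answer back to the exact source that needs to be reviewed or edited.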

If an AI system lacks these features, then it is not an explainable system. Without access to these metrics and citations, you as a user are essentially trusting a black box with critical guest communications and potentially private, personally identifiable information.

As AI continues to spread through the hospitality space, it is important to differentiate between systems that are thin "wrappers" on top of OpenAI and systems built from the ground up to deliver explainability and quantifiable metrics, running on private, on-premises models that keep user data completely isolated from third-party models and systems.

How does Yada implement Explainable AI?

At Yada, we believe artificial intelligence can be a powerful tool for enhancing the guest experience - but only if that AI is transparent and accountable. That's why we've pioneered innovative approaches to make our AI guest messaging chatbot not only highly capable, but also explainable and quantifiable.

Implementing Explainable AI: One of our core principles is providing visibility into how our chatbot operates and makes its decisions. We embed explainable AI (XAI) techniques so users understand the rationale behind the chatbot's responses.

For example, let's say a guest asks about good restaurants for a date night dinner. Our chatbot will not only provide personalized recommendations, but also expose the key factors that influenced those recommendations. This could include:

- Guest preferences: Based on your previously stated preferences for Italian food and a romantic ambiance

- Guest context: Considering your location near downtown and this evening's timeframe

- Restaurant data: I analyzed reviews, pricing, amenities, and availability at highly rated local restaurants

The chatbot visually surfaces feature importance and decision logic through model-agnostic explanations. This allows users to validate whether the reasoning behind the AI's recommendations aligns with their expectations and existing data.
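
A model-agnostic explanation treats the recommender as a black box and asks how its output changes when inputs change. The sketch below uses occlusion, one simple model-agnostic technique (others include permutation importance, LIME, and SHAP); the source does not say which technique Yada uses. The scoring function, feature names, weights, and baseline values are all hypothetical, chosen only to mirror the date-night example.

```python
from typing import Callable

Features = dict[str, float]


def score_restaurant(f: Features) -> float:
    """Hypothetical recommender score; the explainer never inspects its internals."""
    return (
        2.0 * f["cuisine_match"]
        + 1.5 * f["romantic_ambiance"]
        + 1.0 * f["rating"]
        - 0.5 * f["distance_km"]
    )


def occlusion_importance(
    model: Callable[[Features], float], x: Features, baseline: Features
) -> dict[str, float]:
    """Model-agnostic attribution: how much does the score drop if one
    feature is reset to its baseline value while the others stay fixed?"""
    full = model(x)
    importance = {}
    for name in x:
        perturbed = dict(x)
        perturbed[name] = baseline[name]
        importance[name] = full - model(perturbed)
    # List the most influential factors first.
    return dict(sorted(importance.items(), key=lambda kv: abs(kv[1]), reverse=True))


# Hypothetical candidate restaurant and an "uninformative" reference point.
candidate = {"cuisine_match": 1.0, "romantic_ambiance": 0.8, "rating": 4.6, "distance_km": 1.2}
baseline = {"cuisine_match": 0.0, "romantic_ambiance": 0.0, "rating": 3.5, "distance_km": 5.0}

for feature, delta in occlusion_importance(score_restaurant, candidate, baseline).items():
    print(f"{feature}: {delta:+.2f}")
```

For a linear scorer like this one, each attribution equals the feature's weight times its distance from the baseline, so the result is easy to check by hand. The point of the approach is that `occlusion_importance` works unchanged for any scoring function, including one whose internals are not visible.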

We also give users quantitative explanations of how accurate AI responses are. Every AI response is accompanied by scores that show, as a percentage, how similar the response is to the user's data sets and content. This lets users control the bot's safety thresholds and see where they can add more data and information to their content.
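
The source does not specify how the similarity percentage is computed. As one illustrative assumption, the sketch below scores a response by its cosine similarity to the best-matching source document using simple bag-of-words vectors (production systems more often compare learned embeddings), then gates the reply on a user-set threshold. The names `grounding_score` and `SAFETY_THRESHOLD`, the 60% cutoff, and the sample sources are hypothetical.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Lower-cased bag-of-words term counts (a simple stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two term-count vectors, in [0, 1]."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def grounding_score(response: str, sources: list[str]) -> float:
    """Percentage similarity between a response and its best-matching source."""
    r = term_vector(response)
    return 100 * max((cosine_similarity(r, term_vector(s)) for s in sources), default=0.0)


SAFETY_THRESHOLD = 60.0  # hypothetical, user-configurable cutoff in percent

sources = [
    "Check-in begins at 3 PM and check-out is at 11 AM.",
    "The pool is open from 8 AM to 10 PM daily.",
]
for reply in ["Check-in begins at 3 PM.", "We offer free airport shuttles every hour."]:
    score = grounding_score(reply, sources)
    action = "send" if score >= SAFETY_THRESHOLD else "route to staff"
    print(f"{score:5.1f}%  {action}  {reply}")
```

With these toy sources, the first reply shares most of its terms with the check-in policy and clears the threshold. The second has no terms in common with any source, scores 0%, and would be held back. A low score like that also points to a gap in the content that the user could fill.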

Our team of data scientists and conversational AI experts is continuously working to enhance our explainable AI capabilities and identify new forms of transparent communication.

Quantifying the AI's Impact: In addition to qualitative explainability, we also quantify and measure how our AI chatbot delivers value across key performance metrics. We use rigorous, quantitative processes to track metrics such as:

- Guest Satisfaction: Pre/post NPS and CSAT scores compared to non-AI guest service

- Productivity Gains: Time and cost savings compared to human agents handling the same queries

- Revenue Impact: Direct increases in revenue influenced by the chatbot's recommendations

- Accuracy: Precision of the chatbot's responses, as validated by human raters and as compared against a user's content.
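
The pre/post satisfaction comparison in the list above rests on two standard survey formulas. NPS uses 0-10 ratings and subtracts the percentage of detractors (0-6) from the percentage of promoters (9-10). CSAT definitions vary; this sketch assumes the common convention of counting 4s and 5s on a 1-5 scale. The function names and survey samples are made up for illustration and are not real results.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score from 0-10 ratings: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one rating")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def csat(ratings: list[int]) -> float:
    """CSAT from 1-5 ratings: percentage of guests who answered 4 or 5 (assumed convention)."""
    if not ratings:
        raise ValueError("need at least one rating")
    return 100 * sum(1 for r in ratings if r >= 4) / len(ratings)


# Made-up survey samples, for illustration only.
before_ai = {"nps": [10, 9, 8, 7, 6, 4, 9, 8], "csat": [5, 4, 3, 4, 2, 5, 3, 4]}
after_ai = {"nps": [10, 9, 9, 8, 7, 6, 10, 9], "csat": [5, 5, 4, 4, 3, 5, 4, 4]}

for metric, fn in (("NPS", nps), ("CSAT", csat)):
    key = metric.lower()
    pre, post = fn(before_ai[key]), fn(after_ai[key])
    print(f"{metric}: {pre:.1f} -> {post:.1f} (change {post - pre:+.1f})")
```

Note that NPS ranges from -100 to +100 and ignores passives (7-8), so it can move differently from CSAT on the same guests. Reporting both gives a fuller picture.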

We establish tight feedback loops to measure the AI's quantifiable impact, rapidly identify areas for improvement, and keep enhancing our machine learning models over time. We are transparent about sharing these performance metrics, both with customers and within our internal teams.

Trust through Verifiable AI: Whether through explainable or quantifiable AI, our goal is to make our guest messaging chatbot trustworthy by ensuring its responses and outputs are interpretable, measurable, and verifiable. We believe this builds justified confidence in AI as a service enhancement rather than as an opaque or blind decision-maker.

As AI continues to evolve, Yada remains committed to pushing the boundaries of transparent and accountable AI that provides true value to both guests and hospitality businesses. We welcome feedback as we continue pioneering new frontiers in this space.
